Goodbye legal compliance and data quality issues

Stop building on sand: When you collect analytics events and other PII, legal and technical limitations erode your data. Our privacy-first approach captures as much data as legally and technically possible, supplemented by synthetic data points, to provide you with one central, rock-solid foundation for everything:

-

For teams

-

For use cases

-

For tools

-

For compliance

Marketing & Product

Data & Engineering

Marketing & Ecommerce

Customer & Product

Management & Other

Marketing & Analytics

Advertising & Data

Cloud Platforms

Avoid this problem: Non-compliant or incomplete data can sink you

Everything you’ve built can be severely damaged or washed away completely, if the data you are using in your analytics tools, your marketing technologies, or your data pipelines, including machine learning and AI, turns out to be illegal and/or has significant quality issues:

| Damage | Root cause |

|---|---|

| Fines | Non-compliant data |

| Reputational damage | Illegal privacy invasion |

| Tools don’t work as intended | Unreliable, low-quality data |

| Numbers are incorrect | Incomplete or wrong data |

| Work has to be destroyed | Illegal input data, e.g. to train AI |

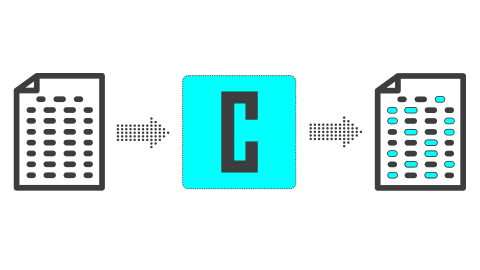

Our approach: Rock-solid data that is both compliant and complete

Contrary to popular belief, lawful data doesn’t mean less data. It can still be complete, but it requires a much more sophisticated approach:

We call it the Data Cape because of its ability to resist data erosion and data loss, similar to how a geological cape is able to resist land erosion.

Likewise, a data cape provides a solid foundation that can withstand even the worst storm, or in other words: Your tools and use cases always work.

Our solution: Ready-to-use analytics data, compliant and complete

Our services: We gather as much data as possible

Achieving compliance and completeness starts at the source, where the data is first created. Because that’s not easy, our platform comes with our initial and ongoing implementation services to ensure the best possible data quality, both legally and technologically.

We collect user events, also known as digital analytics, clickstream, or behavioral data, from websites and mobile apps, and other user-facing systems, for example CRMs.

Our platform: We assure your data’s quality and compliance

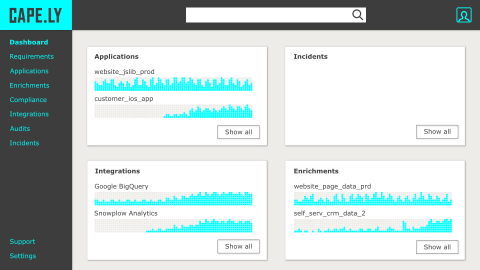

Our compliance platform receives all the user event data and distributes it to the consumers you specify, e.g. cloud platforms, data pipelines, analytics tools, and marketing technologies.

The user interface allows you to manage your data requirements, configure compliance measures, perform audits, and set up any modifications you want to apply to the data.

Your company: You use your data in your tools and use cases

We provide customers like you with ready-to-use data through our platform. Your work only begins when you receive it in your tools to implement your use cases. Everything before data consumption is taken care of by us, for a fixed monthly fee.

Sending the collected data to a new tool or use case is easy to set up on our platform. You should never need an additional implementation, other than the one we maintain for you.

What makes us unique: 100% focus on the data

Based on our decade of experience, one thing is clear: Tools and use cases can only work as good as the underlying data allows.That’s why we spend all our energy to create the best data possible, see our implementation services.

No time is wasted on building unrelated features. We believe that others are doing a better job and want you to use our data with your preferred third-party tools, e.g. to analyze it.

Global approach to privacy, originally from Europe

Originally from Germany, we helped German clients gather complete data that was compliant with the strict German privacy laws long before EU-wide regulations like GDPR.

Ensuring compliance while enabling downstream use cases went from a necessity to our passion. And that’s why all our packages except the Self-service Package include compliance services for at least one jurisdiction.

More than a decade of experience

The company was founded in 2013 in Germany to help medium-sized to large companies gather compliant and complete data using common digital analytics tools.

Over the past decade, we worked with companies in Europe and North America from a variety of industries, for example, retail, media, travel, telecommunications, utilities, finance and insurance.

Predictable pricing with a fixed-fee model

Using our services and platform removes any worries about unexpected expenses because they are offered at a fixed fee, including any manual labor. So for example, even if there is a data issue that requires us to spend a lot of time figuring out what happens and identifying any root causes, you don’t pay more.

Our model also greatly benefits you especially in the beginning because we don’t charge any setup fees or for the initial implementation, which naturally comes with significantly higher efforts.

Unique combination: Implementation included

Most technical platforms don’t include the services required to implement them. However, because we believe that both go hand in hand, and it requires significant efforts and knowledge to achieve compliant and complete data, we offer our platform with the corresponding implementation services as a package.

The great thing for you, especially if you are not a technical person, is that you don’t have to worry about anything else than actually using the data. We take care of everything that happens upstream before you receive the data.

Cost reductions: Eliminating redundancies

When you use our platform and our services, you are adding a data source, and most companies already have other tools that collect similar data. However, we are convinced that almost no other data source gets even close to our data regarding compliance and completeness, but also reliability and usability.

That’s why we think that over time you are able to reduce the number of data sources, saving you and your teams work, costs, and complexity. Removing these redundancies allows for a more streamlined effort to provide your organization with the data it needs to operate.

Ensure global legal compliance

Our platform takes into account a user’s consent status to ensure compliance. However, our unique approach adjusts the data at the most granular level: individual data points. Our main priority will always be compliance.

But almost equally as important is to provide as much data as possible, which is why we pursue our data-point-level approach, optimizing every single one for completeness. We use the same level of detail to scan for and prevent accidental leaks of PII.

Guaranteed the most complete data

Our solution is the result of more than a decade of maximizing data for use cases while ensuring compliance. That’s why we optimize every individual data point. When users consented to the processing of their PII, there is generally no problem.

However, if the consent is limited or none given at all, our platform has to make adjustments. Without consent, our platform randomizes PII while persisting as much non-PII information as possible, for example to allow ad campaign conversion measurement.

Reliably achieve your work objectives

When we talk to people that use data in their job, non-compliant and non-existent data comes up all the time. They complain about the data’s quality and legal uncertainty.

We want to give data workers peace of mind and allow them to focus on their actual work objectives. We use more than a decade of experience to enable all kinds of use cases.

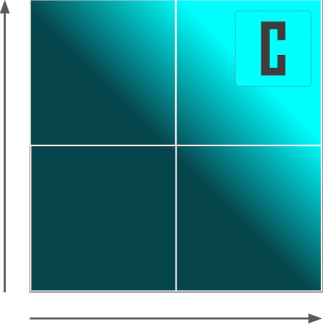

How do you compare in our Solidness Quadrant?

Legal compliance

Data completeness

The Solidness Quadrant provides a graphical comparison of companies based on their data’s solidness, as measured by its completeness and its legal compliance.